"Discovered – currently not indexed": 10 proven techniques to fix it

Stuck with "discovered – currently not indexed" pages? Get your pages indexed and drive more traffic with these simple techniques used by SEO experts.

Updated May 23, 2025

After hours of work, you've created the perfect webpage. You've optimized keywords, designed layouts, and polished content. Still, your page is nowhere to be found. Is Google playing a trick on you?

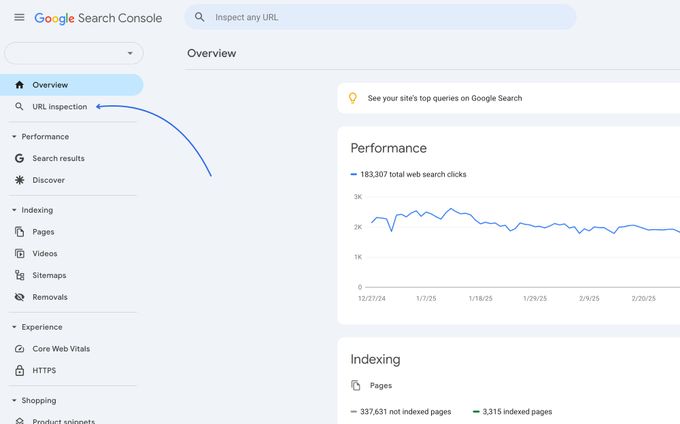

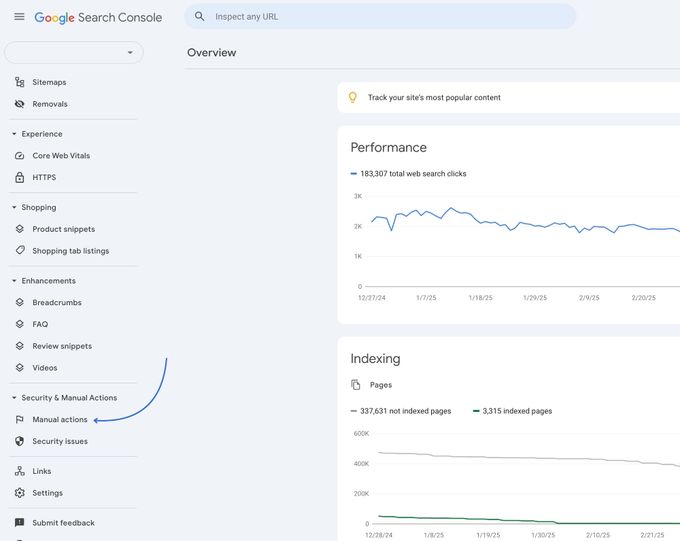

You go to Google Search Console and inspect the URL only to find that Google has marked it as "discovered – currently not indexed." So your page exists, but it's trapped in limbo: invisible to users, ignored by search results, and silently draining your SEO efforts.

Every day a page remains unindexed is a missed opportunity. Competitors rank higher. Organic traffic evaporates. Leads vanish. Without answers, frustration grows. In this guide, we'll share tried-and-tested techniques to break your pages out of indexing purgatory.

» Want help fixing "discovered - currently not indexed"? Book a free consultation.

Key takeaways

- "Discovered - currently not indexed" means Google knows a page exists but hasn't crawled or indexed it yet.

- This happens due to low-quality content, poor internal linking, crawl budget limits, or technical issues.

- Techniques to get pages out of this status include requesting indexing, improving content, and solving technical issues.

- Use Google Search Console to track indexing and optimize regularly.

What is "discovered – currently not indexed"?

The "discovered - currently not indexed" status in Google Search Console means that Google has found a URL on your website but hasn't crawled or indexed it yet. While Google knows the page exists, it hasn't processed it to include it in its search results.

The implications of "discovered – currently not indexed" on your website

The status is not inherently bad, but whether it becomes a problem depends on the scale and context:

Normal scenario: This status can be temporary for small websites or new pages. Google may need more time to crawl and index the pages. If you see only a few pages affected, it's usually not a problem.

Problematic scenario: If many pages remain in this state for a long time, it could mean you have issues with:

Content quality: Pages with low-quality or duplicate content may not meet Google's indexing threshold.

Internal linking: Poor internal linking can make it harder for Google to prioritize crawling certain pages.

Crawl budget: Larger websites can exceed their allocated crawl budget, preventing Google from indexing all URLs efficiently.

Technical issues: Overloaded servers or incorrect configurations (e.g., robots.txt settings) can block Google's crawlers.

"Discovered – currently not indexed" vs. "crawled – currently not indexed"

The main difference between "crawled - currently not indexed" and "discovered - currently not indexed" lies in Googlebot's interaction with a webpage.

When you have a URL labeled as "crawled," it signals that Google has already visited the page, analyzed its content, and checked if it's suitable for indexing.

Despite this visit, it has decided not to include the page in its search index because of low-quality or duplicate content, technical issues, poor site structure, etc. This status means that Google has processed the page but labeled it unsuitable for indexing at this time.

On the other hand, "discovered – currently not indexed" means that Google has found the URL, typically through a sitemap or a link from another page, but has not yet crawled the page.

Google is aware the page exists and intends to crawl it eventually, but it hasn't prioritized it at the moment. It may have delayed it due to crawl budget limits, server overload, or perceived lower priority of the page.

Here's a summary of the key differences between "discovered – currently not indexed" and "crawled – currently not indexed":

| Status | Google's action | Reason for exclusion |
|---|---|---|
| Discovered – currently not indexed | URL identified but not crawled | Crawl budget issues, server overload, poor links |
| Crawled – currently not indexed | URL crawled but not indexed | Content quality, duplication, domain authority |

10 techniques to fix "Discovered – currently not indexed"

1. Request indexing manually

Google's automated crawlers prioritize pages based on perceived importance and freshness, but some URLs may slip through the cracks. Manually requesting indexing is a direct way to prompt Google to reevaluate the page.

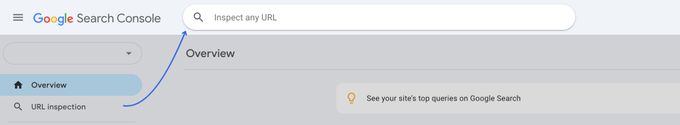

To do this, open the URL Inspection Tool in GSC. Paste the target URL into the search box at the top of the page and hit Enter.

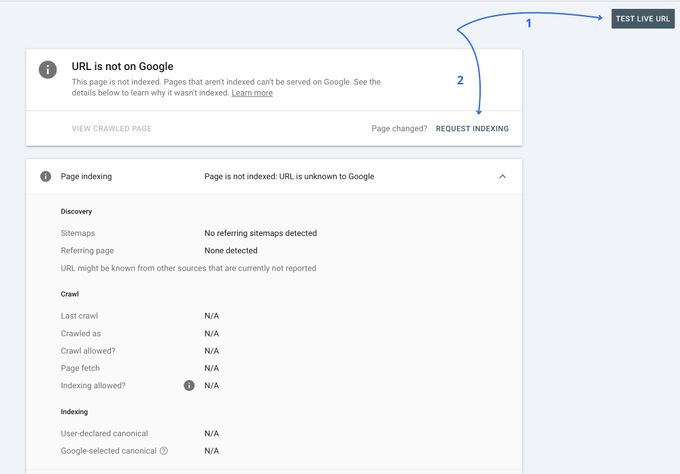

You'll now end up on the URL inspection page. Next, click Test Live URL.

If it detects no critical errors, select Request Indexing. This tool provides quick feedback on crawlability and highlights issues like server errors or robots.txt blocks.

Note: Use this feature carefully. Google caps the number of manual requests you can make each day, and requesting the same URL repeatedly won't get it crawled any faster. Save this method for high-priority pages, such as product launches or time-sensitive articles.

» Learn how to stay ahead of the changes coming to Google Search.

2. Audit content quality

Low-quality content is a common reason for non-indexing. Google's algorithms prioritize pages that offer value, depth, and relevance. It deprioritizes pages with minimal text, duplicate product descriptions, or mass-produced content that adds little value, whether a person or an AI tool wrote it.

You can use tools like Copyscape to identify duplicate content and Grammarly or Hemingway Editor to improve readability. You should also make sure your page matches search intent: expand sections with examples, data, or multimedia (images, videos, infographics).

For example, a 300-word blog post might lack sufficient detail compared to a 1,500-word guide with step-by-step instructions.

Additionally, avoid keyword stuffing and ensure your content aligns with Google's E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) guidelines, especially for YMYL topics like health or finance.
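For a quick first pass at the duplicate-content check mentioned above, a small script can flag pages whose text overlaps heavily before you reach for a dedicated tool. The sketch below compares pages by word shingles and Jaccard similarity. The function names, the 3-word shingle size, and the 0.8 threshold are illustrative assumptions, not values Google publishes.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word n-grams (shingles) in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are trivially identical
    return len(sa & sb) / len(sa | sb)


def find_near_duplicates(pages: dict[str, str], threshold: float = 0.8):
    """Return (url_a, url_b, similarity) for every pair at or above the threshold.

    `pages` maps a URL to its extracted body text. The threshold is an
    arbitrary starting point (an assumption), so tune it for your own site.
    """
    results = []
    for url_a, url_b in combinations(sorted(pages), 2):
        score = jaccard_similarity(pages[url_a], pages[url_b])
        if score >= threshold:
            results.append((url_a, url_b, score))
    return results
```

Pairs that score high are candidates for rewriting, consolidating, or canonicalizing. The comparison is pairwise, so it suits a few hundred pages rather than a whole large catalog.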

Tip: You should establish yourself with thought leadership content written by subject matter experts. This kind of content is easily quotable and attracts links from external websites.

3. Strengthen internal linking

Internal links are pathways for crawlers to discover pages and signal their importance. Orphaned pages (those with no internal links pointing to them) are less likely to be indexed.

You should audit your site's structure using tools like Screaming Frog and find pages with few or no inbound internal links. To strengthen internal linking, link to the non-indexed page from high-authority pages like your homepage, blog posts, or pillar content.
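If you can export your site's internal links from a crawler, the audit above comes down to counting inbound links per page. This is a minimal sketch that assumes you already have the link graph as a dictionary mapping each page to the pages it links to. The function names and the sample paths in the usage note are hypothetical.

```python
def inbound_link_counts(link_graph: dict[str, list[str]]) -> dict[str, int]:
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = {page: 0 for page in link_graph}
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page only once
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts


def weakly_linked_pages(
    link_graph: dict[str, list[str]],
    min_inbound: int = 1,
    exclude: tuple[str, ...] = ("/",),
) -> list[str]:
    """Return pages with fewer than `min_inbound` inbound internal links.

    With the default of 1 this lists orphaned pages. The homepage is
    excluded by default because it is usually reached directly.
    """
    counts = inbound_link_counts(link_graph)
    return sorted(
        page for page, n in counts.items()
        if n < min_inbound and page not in exclude
    )
```

For example, `weakly_linked_pages({"/": ["/blog"], "/blog": ["/"], "/blog/post-2": []})` returns `["/blog/post-2"]`, which is the page to link to from your stronger pages.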

Note: Use descriptive anchor text for your internal links and avoid vague phrases like "click here." You should also update your old posts with links to newer, relevant content so they pass authority to it.

» Learn to drive users towards purchases using the SEO conversion funnel.

4. Submit/update XML sitemap

An XML sitemap is like a roadmap for search engines. It lists your important pages and their metadata. You should ensure your sitemap is generated automatically (by your CMS or with a crawler like Screaming Frog) and includes only indexable URLs.

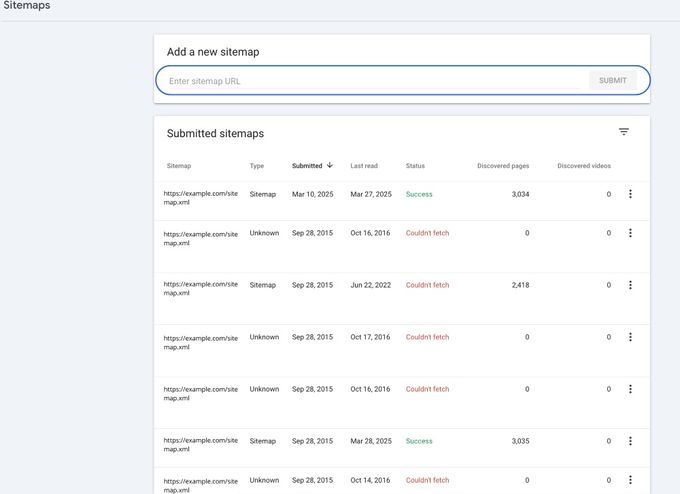

You can submit the sitemap via GSC under Indexing → Sitemaps and monitor the Sitemaps Report for errors like blocked URLs or 404s. A single sitemap file can hold at most 50,000 URLs or 50 MB uncompressed, so if you have a large website, split the sitemap into smaller files (e.g., by category) and list them in a sitemap index.
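If your CMS doesn't produce a sitemap for you, generating one is simple enough to script. The sketch below builds sitemap XML with Python's standard library and splits the URL list into several files once it passes a per-file cap. The function name is an assumption, and the example covers only the `<loc>` element, not optional fields like `<lastmod>`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file URL limit in the sitemaps protocol


def build_sitemaps(urls: list[str], max_urls: int = MAX_URLS_PER_FILE) -> list[str]:
    """Return one XML document (as a string) per sitemap file.

    Each document holds at most `max_urls` URLs, so a long list is split
    across several files automatically.
    """
    documents = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            entry = ET.SubElement(urlset, "url")
            ET.SubElement(entry, "loc").text = url  # special characters are escaped
        xml_body = ET.tostring(urlset, encoding="unicode")
        documents.append('<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body)
    return documents
```

Pass in only the URLs you want indexed: canonical, returning a 200 status, and not blocked by robots.txt or noindex. Write each returned string to its own file before submitting.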

Note: While Google's John Mueller has said that sitemaps don't guarantee indexing, they help crawlers prioritize pages—especially on sites with complex navigation or new content.

» Convert users based on their intent with the SEO marketing funnel.

5. Optimize crawl budget

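Crawl budget is the set of URLs Googlebot can and wants to crawl on your site. It depends on how much crawling your server can handle and how much demand Google sees for your content. Large or poorly structured sites may exhaust their budget on low-value pages like filters or session IDs.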

To optimize your crawl budget, you can:

Improve site speed: Use Google PageSpeed Insights to identify bottlenecks like render-blocking JavaScript or unoptimized images.

Fix crawl errors: Resolve 4xx/5xx errors in the Page indexing report (formerly the Coverage Report) to prevent wasted crawls.

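Block low-priority pages: Use robots.txt to stop Googlebot from crawling non-essential URLs such as admin panels, and use noindex for pages that can be crawled but shouldn't appear in search, such as duplicate tag pages. Don't combine the two on the same URL, because Google can't see a noindex directive on a page it isn't allowed to crawl. For example, an e-commerce site with 10,000 product pages should keep crawlers away from faceted navigation URLs (e.g., ?color=red&size=large).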
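To see how much of a URL list (from a crawl export or server logs) consists of low-value parameter URLs like the faceted example above, you can classify URLs by their query parameters. This is a rough sketch. The parameter names in `LOW_VALUE_PARAMS` are hypothetical and should be replaced with the filter, sort, and session parameters your own site uses.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical parameter names; replace with the ones your site generates.
LOW_VALUE_PARAMS = frozenset({"color", "size", "sort", "sessionid"})


def is_low_value_url(url: str, low_value_params=LOW_VALUE_PARAMS) -> bool:
    """True if the URL carries at least one filter/session style parameter."""
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return any(name.lower() in low_value_params for name in query)


def crawl_waste_share(urls: list[str]) -> float:
    """Fraction of the given URLs that are low-value parameter URLs."""
    if not urls:
        return 0.0
    return sum(is_low_value_url(u) for u in urls) / len(urls)
```

A high share suggests Googlebot may be spending a lot of its crawling on URL variations rather than on the pages you want indexed.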

» Check out the top SEO goals to target in 2026.

6. Resolve technical barriers

Some technical issues can prevent Googlebot from accessing or rendering a page. Here's what they are and how to address them:

Server errors: Check the Page indexing report for 5xx errors and work with your hosting provider to resolve downtime or resource limits.

Robots.txt blocks: Test URLs in the URL Inspection Tool to ensure they're not accidentally disallowed.

JavaScript/AJAX content: Ensure critical content (text, links) renders without JavaScript. Use Google's Rich Results Test to check for renderability.

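Core Web Vitals: Improve loading performance (LCP), interactivity (INP, which replaced FID in March 2024), and visual stability (CLS) to meet Google's thresholds. Google's "good" thresholds are an LCP of 2.5 seconds or less, an INP of 200 milliseconds or less, and a CLS of 0.1 or less.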
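The robots.txt check above can also be run in bulk before you inspect URLs one by one in GSC. The sketch below uses Python's built-in `urllib.robotparser` to list which URLs a given robots.txt would block for Googlebot. The function name is an assumption. Python's parser does plain prefix matching and does not implement the `*` and `$` wildcards that Google supports, so treat the result as a rough first check rather than a mirror of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(
    robots_txt: str,
    urls: list[str],
    user_agent: str = "Googlebot",
) -> list[str]:
    """Return the URLs that the given robots.txt content disallows.

    `robots_txt` is the raw text of the file, so you can test a draft
    before deploying it. Wildcard rules are NOT interpreted the way
    Google interprets them (limitation of the standard-library parser).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

If a page you want indexed shows up in the returned list, fix the matching Disallow rule and then confirm the result with the URL Inspection Tool.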

» Learn more about the Google Crawl Stats report.

7. Verify canonical tags and redirects

If you have misconfigured canonical tags, they can confuse crawlers by suggesting that a page is a duplicate of another.

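For example, if https://example.com/page and https://example.com/page?utm_source=fb each carry a self-referencing canonical, Google gets two competing claims for the same content and has to pick one on its own. Pointing the canonical on the parameterized URL at https://example.com/page removes the ambiguity. You can use tools like Screaming Frog to audit canonicals and ensure they point to the preferred version.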
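A canonical audit like the one described above can be partly automated: work out the preferred URL by stripping tracking parameters, then compare it with the canonical each page declares. The sketch below assumes you have exported a mapping of page URL to declared canonical from a crawler. The tracking-parameter list and function names are illustrative assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameters; extend the lists for your own campaigns.
TRACKING_PREFIXES = ("utm_",)
TRACKING_PARAMS = {"fbclid", "gclid"}


def preferred_url(url: str) -> str:
    """Strip tracking parameters and fragments to get the clean URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIXES)
        and key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_mismatches(declared: dict[str, str]) -> dict[str, str]:
    """Map each page whose declared canonical is wrong to the expected one.

    `declared` maps a page URL to the canonical URL found in its HTML.
    """
    return {
        url: preferred_url(url)
        for url, canonical in declared.items()
        if canonical != preferred_url(url)
    }
```

Each entry in the result is a page whose canonical tag should be updated to the value shown.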

You should also avoid redirect chains (e.g., Page A → Page B → Page C), as they slow down crawling and dilute authority. Implement 301 redirects directly to the final destination.
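Redirect chains are easy to detect once you have your redirect rules as a simple source-to-target map. The sketch below follows each redirect to its final destination, reports chains with more than one hop, and produces a flattened map where every source points straight to the end of its chain. The function names and the ten-hop safety limit are assumptions for illustration.

```python
def resolve_redirect(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from `start` and return every URL visited in order."""
    path = [start]
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in path or len(path) > max_hops:
            raise ValueError(f"redirect loop or overly long chain starting at {start}")
        path.append(target)
    return path


def find_chains(redirects: dict[str, str]) -> dict[str, list[str]]:
    """Return the sources whose redirect passes through at least one extra hop."""
    chains = {}
    for source in redirects:
        path = resolve_redirect(source, redirects)
        if len(path) > 2:  # source -> intermediate -> destination
            chains[source] = path
    return chains


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Return a map where each source points directly to its final destination."""
    return {source: resolve_redirect(source, redirects)[-1] for source in redirects}
```

With `{"/a": "/b", "/b": "/c"}` as input, `flatten_redirects` returns `{"/a": "/c", "/b": "/c"}`, which is the set of direct 301 rules to deploy.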

» Explore techniques to get better ROI from SEO.

8. Improve page authority

Google tends to crawl and index pages with more authority (earned through backlinks or internal links) sooner. Get high-quality backlinks by creating shareable content like research studies, tutorials, or tools.

For example, a well-researched "2025 SEO Trends Report" could attract links from industry blogs. You can also promote the page via email newsletters or social media to put it in front of people who might link to it.

Tip: Ensure your site's navigation menu or footer links to the page if it's a key service or product page.

9. Check for manual actions or security issues

Google may exclude pages if your site has a manual penalty, such as for spammy links or security issues with hacked content. In GSC, you can navigate to Security & Manual Actions to review warnings.

Note: If you're penalized for unnatural links, get the links removed where you can, ask Google to ignore the rest with the Disavow Tool, and then submit a reconsideration request. If your website gets hacked, clean the malware, update passwords, and request a security review in the Security Issues report.

» Explore our list of the top SEO KPIs to track this year.

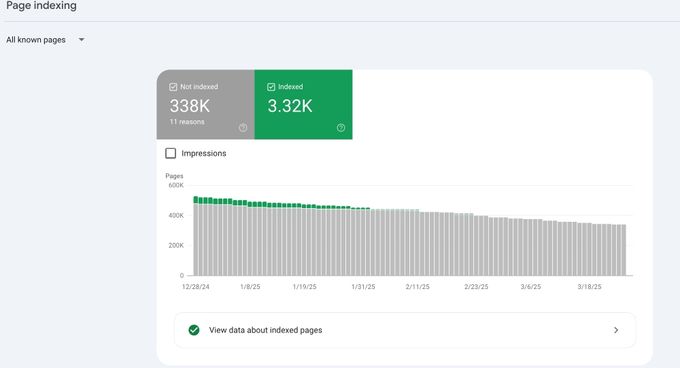

10. Monitor indexing progress

Track the progress of your page using GSC's Page indexing report and URL Inspection Tool. Allow 1–2 weeks for Google to recrawl the page.

For persistent issues, try:

Recrawling triggers: Update the page's content or metadata to prompt a faster crawl.

Server log analysis: Use tools like Screaming Frog Log File Analyzer to verify if Googlebot is accessing the URL.
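If you don't have a log analysis tool at hand, a short script can answer the basic question of whether Googlebot has requested a URL at all. The sketch below assumes your server writes the common "combined" access log format, with the user agent as the last quoted field. Adjust the pattern if your format differs. It matches on the user-agent string only, which can be spoofed, so verify suspicious hits with a reverse DNS lookup.

```python
import re
from collections import Counter

# Assumes the "combined" log format: request line, status, size,
# referrer, then the user agent as the final quoted field.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_hits(log_lines) -> Counter:
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits
```

Pass in an open log file (or any iterable of lines) and look up the path you care about. A count of zero over several weeks suggests Google still hasn't prioritized the page.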

» Improve your CTRs and conversions with SEO A/B testing.

Take your pages from "discovered" to "indexed"

The "discovered – currently not indexed" status in Google Search Console is a common challenge. It shows how important it is to align your website with Google's priorities.

No single fix guarantees immediate results, but combining the techniques above with consistent monitoring improves your chances of getting your pages indexed.

Ultimately, Google's goal is to index pages that deliver value to users. By eliminating technical roadblocks, creating authoritative content, and streamlining crawl efficiency, you align your site with these priorities.