Google's Crawl Stats report: A guide to monitoring your site’s crawlability

Explore all there is to know about Google Search Console's Crawl Stats report—from the key metrics and what they mean to how you can leverage this data for SEO.

Updated May 23, 2025

AI Summary

Crawl stats are the metrics in Google Search Console's Crawl Stats report that indicate how Google crawls your website. Monitoring this report is key for maintaining site health, identifying issues, and optimizing your SEO strategy. Let's explore the importance of Google's Crawl Stats report, break down key metrics and statuses, and discuss how to use this data to enhance your site's performance.

Key takeaways

- Finding a correlation between the Crawl Stats report and your traffic is almost impossible.

- The main value of the Crawl Stats report lies in the response codes Google receives when it crawls your site.

- Use the Crawl Stats report to identify and fix issues affecting your site's crawlability.

What is Google Search Console's Crawl Stats report?

Google Search Console's Crawl Stats report provides insights into how Google crawls your site, including the number of crawl requests made, response times, and any technical issues encountered during the crawl. It also gives you a picture of the health of your domain and website.

If you know how to read the report correctly, you can use it to improve your website's SEO and get more traffic. You can see what type of response Google gets from your site, whether your pages are functioning correctly, and how many pages Google can access. This is especially useful for large websites where tracking the status and quality of every page is challenging.

» Get perfectly SEO'd pages out of the box with Entail's no-code page builder.

Why does Google need to crawl your website so often?

Google can crawl a website multiple times daily, often revisiting the same pages repeatedly. Recrawling lets Google detect when a page's content has changed, which keeps its index current and makes search results harder to manipulate.

In the past, website owners could manipulate search results by publishing a page on a popular topic, getting traffic, and then replacing the content with unrelated or potentially harmful material. For example, someone might create a page about hair care products, attract visitors, and then switch the content to promote gambling or scams.

Crawl Stats report metrics and what they mean

Google Search Console's Crawl Stats report includes several key metrics. Let's take a closer look at each.

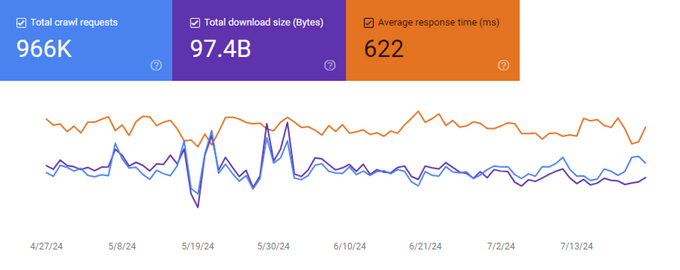

Total crawl requests

"Total crawl requests" shows how many crawl requests Google made to your site during the reporting period. Sites with more pages generally receive more requests, and more frequent crawling can suggest that Google values your site.

However, if many of your pages lack value, Google may reduce crawling and leave them in the "Crawled - currently not indexed" or "Discovered - currently not indexed" status.

» Find out why Google isn't indexing your website.

Total download size

The "total download size" section in the Crawl Stats report shows how much data Google downloads from your website during its crawls. This includes all the resources on your site, such as HTML, images, CSS, and script files.

Average response time

"Average response time" shows, in milliseconds, how quickly your site responds to Google's requests. It's an indicator of your website's health and page speed, which are important factors for SEO. A low response time suggests your website performs well.

» Create lightning-fast pages with Entail's page builder—no dev work required.

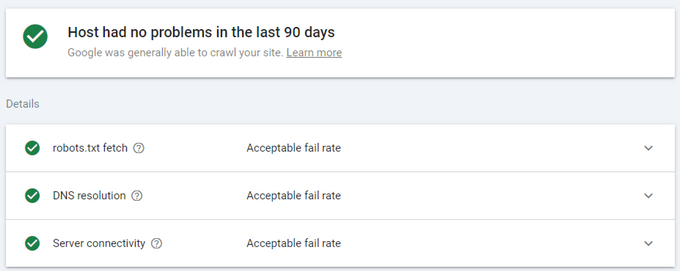

Hosts

The "hosts" section in the Crawl Stats report tells you whether Google encountered any availability issues while crawling your site. Google considers three factors for this: robots.txt fetch, DNS resolution, and server connectivity.
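If you want to spot-check these three factors yourself, the sketch below uses only Python's standard library. It is a rough approximation, not how Google measures host status: the function names, the port, the timeout, and the decision to treat a 404 or 410 for robots.txt as acceptable are all assumptions made for illustration.

```python
import socket
import urllib.error
import urllib.request


def check_dns(host: str) -> bool:
    """Return True if the hostname resolves to at least one address."""
    try:
        return bool(socket.getaddrinfo(host, 443))
    except socket.gaierror:
        return False


def check_server(host: str, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to port 443 can be opened."""
    try:
        with socket.create_connection((host, 443), timeout=timeout):
            return True
    except OSError:
        return False


def check_robots(host: str, timeout: float = 5.0) -> bool:
    """Return True if robots.txt is fetched, or clearly does not exist."""
    url = f"https://{host}/robots.txt"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        # Assumption: a missing file (404/410) is not an availability problem.
        return err.code in (404, 410)
    except OSError:
        # Covers URLError, timeouts, and connection failures.
        return False


def host_status(dns_ok: bool, robots_ok: bool, server_ok: bool) -> str:
    """Combine the three checks into a one-line summary."""
    checks = (
        ("DNS resolution", dns_ok),
        ("robots.txt fetch", robots_ok),
        ("server connectivity", server_ok),
    )
    failed = [name for name, ok in checks if not ok]
    return "no problems found" if not failed else "check: " + ", ".join(failed)
```

For a hostname `h`, calling `host_status(check_dns(h), check_robots(h), check_server(h))` gives a quick one-off reading. The report itself reflects Google's own requests over time, so a single local check can disagree with it.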

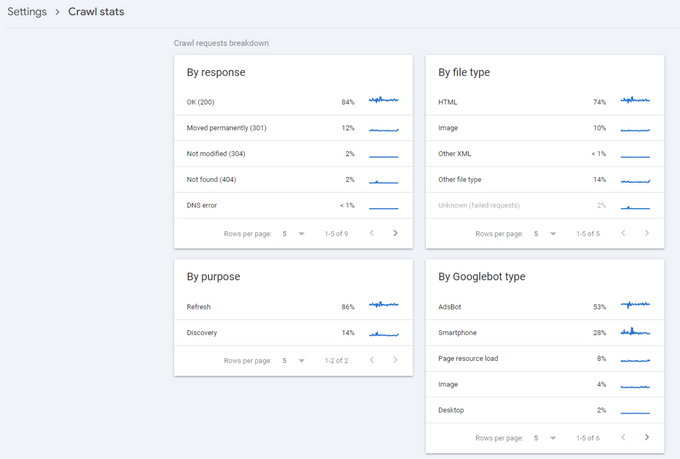

Crawl requests breakdown

The "crawl requests breakdown" section helps you understand how Google sees your site and identifies potential issues affecting your SEO. This section is further broken down into four categories:

- By response

- By purpose

- By file type

- By Googlebot type

By response

Here, you can see the types of responses Google received when crawling your pages. The following statuses are harmless and don't require any action.

- OK (200): These pages are easily accessible to Google and function properly. You should aim to have over 80% of your pages in this status.

- Moved permanently (301): This shows how many of your pages have a 301 redirect. While this isn't an issue, every redirect adds an extra request before Google reaches the final page, so try to avoid unnecessary redirects.

- Moved temporarily (302): These pages have a 302 redirect. If this is intentional, there's no need to do anything.

- Not modified (304): Resources on these pages haven't changed since the previous crawl request. This doesn't need fixing; it simply tells the crawler to use the cached version instead of downloading data again.
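To see why a 304 is harmless, here is a minimal sketch of how a server might choose between 200 and 304 when a crawler sends a conditional request. The helper names and the hash-based ETag scheme are hypothetical, and real `If-None-Match` handling (multiple values, weak validators) is more involved than this exact-match comparison.

```python
import hashlib
from typing import Optional, Tuple


def make_etag(body: bytes) -> str:
    """Build a quoted ETag from a hash of the body (hypothetical scheme)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, bytes]:
    """Return (status, payload) given the request's If-None-Match value."""
    etag = make_etag(body)
    if if_none_match == etag:
        # The client already holds this exact version: send no body.
        return 304, b""
    return 200, body


page = b"<html>hair care guide</html>"
first_status, _ = respond(page, None)                     # first crawl
repeat_status, payload = respond(page, make_etag(page))   # unchanged page
print(first_status, repeat_status, len(payload))          # prints: 200 304 0
```

The second request transfers zero bytes of content, which is why 304 responses save crawl bandwidth instead of signaling a problem.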

The next few statuses prevent Google from accessing your content, which is bad news for SEO. It's best to solve these issues and ask Google to index your pages as soon as possible.

- Not found (404): Google tried to reach a page that doesn't exist anymore. If this is unintentional, fix it. For permanently moved pages, use a 301 redirect. If the page has temporarily moved, apply a 302 redirect. Finally, for permanently deleted pages, return a 410 (Gone) status code.

- Robots.txt not available: Google couldn't fetch your robots.txt file. If the file stays unavailable, Google pauses crawling your site until it gets an acceptable response.

- Unauthorized (401/407): Google was unable to provide the login details required to access these pages. To solve this issue, block them in your robots.txt file.

- Server error (5XX): Google couldn't access the page due to a server error, resulting in an abandoned crawl request.

- Other client error (4XX): This refers to any other client-side 4xx error not included in this list.

- DNS unresponsive: Google was unable to reach your website because the Domain Name System (DNS) server didn't respond.

- DNS error: Google encountered an issue while trying to resolve your website's domain name to an IP address.

- Fetch error: Google couldn't retrieve the page due to server issues or other problems preventing the page from loading.

- Page could not be reached: The request never reached the server, resulting in an error retrieving the page. These requests won't show up in your server logs.

- Page timeout: Google tried to load a page on your site, but the server took too long to respond, causing the request to time out.

- Redirect error: Google encountered an issue when following a redirect on your site. This usually occurs due to a redirect loop, empty redirects, or too many redirects.

- Other error: This refers to any error not included in this list.
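If you have your own list of HTTP status codes, for example from server logs, you can group them under the same labels to compare against the report. This is a minimal sketch: the function names and the sample data are made up, and network-level failures such as DNS errors or timeouts have no status code, so they can't be classified this way.

```python
from collections import Counter
from typing import Iterable

# Status codes that map to a label of their own in the lists above.
EXACT_LABELS = {
    200: "OK (200)",
    301: "Moved permanently (301)",
    302: "Moved temporarily (302)",
    304: "Not modified (304)",
    401: "Unauthorized (401/407)",
    404: "Not found (404)",
    407: "Unauthorized (401/407)",
}


def report_label(status: int) -> str:
    """Map an HTTP status code to a Crawl Stats style label."""
    if status in EXACT_LABELS:
        return EXACT_LABELS[status]
    if 400 <= status <= 499:
        return "Other client error (4XX)"
    if 500 <= status <= 599:
        return "Server error (5XX)"
    return "Other"


def summarize(statuses: Iterable[int]) -> Counter:
    """Count how many responses fall under each label."""
    return Counter(report_label(s) for s in statuses)


def ok_share(statuses: Iterable[int]) -> float:
    """Percentage of responses that were 200 OK."""
    codes = list(statuses)
    if not codes:
        return 0.0
    return 100 * sum(1 for s in codes if s == 200) / len(codes)


sample = [200] * 8 + [301, 503]  # hypothetical data
print(summarize(sample).most_common())
print(ok_share(sample))  # prints: 80.0
```

A result under the 80% target for OK (200) is a cue to look at which of the other labels is taking up the remainder.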

» Get perfect on-page SEO with Entail's no-code page builder.

By purpose

The Crawl Stats report also breaks down Google's visits by purpose:

- Refresh: Google checked your existing pages to ensure the content hasn't changed. Static sites will typically have more refresh requests.

- Discovery: Google crawled the requested URL for the first time. News websites or sites that frequently publish new content typically have a higher percentage of discovery requests.

In my experience, a low discovery percentage (under 5%) suggests Google isn't finding new pages on your site. This isn't a good sign if you're regularly publishing content. Keep an eye on which pages Google indexes and make sure most crawl requests go to your important pages, which usually sit on the main domain. You want Google to find and index new pages as you publish them.
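The discovery percentage is simple arithmetic on the two request counts. The sketch below assumes you have copied the refresh and discovery counts out of the report by hand. The 5% cutoff is the rule of thumb from this article, not an official Google threshold, and the function names and numbers are hypothetical.

```python
def discovery_share(refresh: int, discovery: int) -> float:
    """Percentage of crawl requests that were first-time discoveries."""
    total = refresh + discovery
    if total == 0:
        return 0.0
    return 100 * discovery / total


def is_low_discovery(refresh: int, discovery: int, cutoff: float = 5.0) -> bool:
    """True if the discovery share falls under the rule-of-thumb cutoff."""
    return discovery_share(refresh, discovery) < cutoff


# Hypothetical counts: 970 refresh requests and 30 discovery requests.
print(discovery_share(970, 30))   # prints: 3.0
print(is_low_discovery(970, 30))  # prints: True
```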

» Struggling with unindexed pages? Book a call with an SEO expert.

By file type

The "by file type" section in the Crawl Stats report shows the different types of files that Googlebot has crawled on your website, such as HTML, images, CSS, JavaScript, and videos.

While this section doesn't hold much weight in terms of SEO, it helps you understand which file types make up the bulk of Google's crawl activity and offers insights into how Googlebot accesses and indexes different content types on your site.

By Googlebot type

In the "by Googlebot type" section of the Crawl Stats report, you can find the different types of crawlers or user agents that have made crawl requests to your website. These include smartphone, desktop, image, video, and AdsBot crawlers. The smartphone and desktop crawlers simulate a user on those devices, while the others fetch specific content types or serve specific Google products.

Monitor crawl stats for better site health and SEO

While the Crawl Stats report may not directly correlate with your site's traffic, it's an invaluable tool for identifying and fixing issues that can impact your site's crawlability and SEO performance. By closely monitoring the report and working to get good response codes, you're setting the stage for a healthy website and improved SEO.

» Chat with us to understand and solve issues on your Crawl Stats report.