Are llms.txt files the missing link in AI-powered SEO?

llms.txt is a proposed standard for helping AI/LLMs better understand and use the content on your website. But with little support from major LLM providers and larger websites, its impact is still very limited.

Updated August 19, 2025

As AI continues to redefine search, website owners are looking for ways to help large language models (LLMs) better understand what’s on their sites. But complex HTML pages with navigation, ads, and JavaScript make it difficult for LLMs to find and understand valuable information, according to Australian technologist Jeremy Howard.

Enter llms.txt, Howard's proposed standard for helping AI/LLMs access and interpret your content. While it’s gaining traction in some corners of the web, there’s still a lot of confusion about what it actually does, whether it works, and if it’s worth implementing.

In this article, we’ll break down llms.txt, look at who’s using it, and explore whether it’s a meaningful step toward guiding AI—or just a well-intentioned idea that won’t stick.

Key takeaways

- llms.txt is a proposed file format to help LLMs better understand website content.

- It’s easy to create and experiment with, but no major LLMs currently support it.

- Most SEOs are skeptical, and adoption is limited to a few smaller sites.

- Even if llms.txt doesn’t become standard, making content AI-friendly is a growing priority.

What is llms.txt?

llms.txt is a proposed (i.e., unofficial) standard introduced by Jeremy Howard to help LLMs understand website content more easily. It’s a simple text file, much like robots.txt or sitemap.xml, that site owners can place in their root directory to provide LLMs with a clear, concise guide to the most important or relevant parts of their site.

The idea is to make complex web pages easier for AI to process by highlighting essential information and filtering out noise like navigation menus, ads, or scripts.

Note: As of now, no major LLMs officially support llms.txt. While companies like Anthropic have published their own versions, they haven’t confirmed that their crawlers actually use the file, which means llms.txt isn't very useful at this stage.

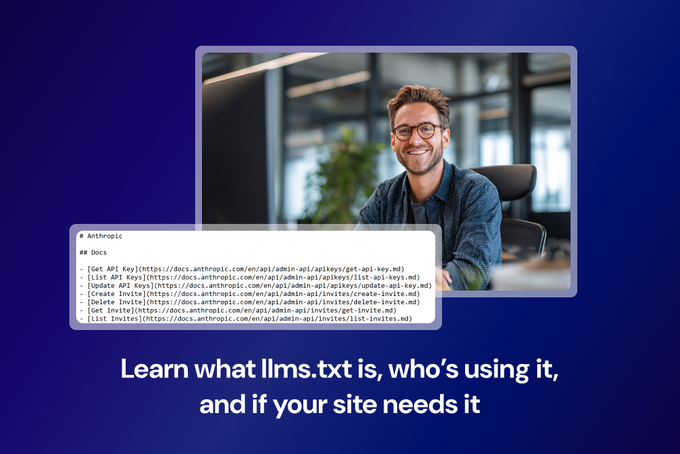

What does an llms.txt file look like?

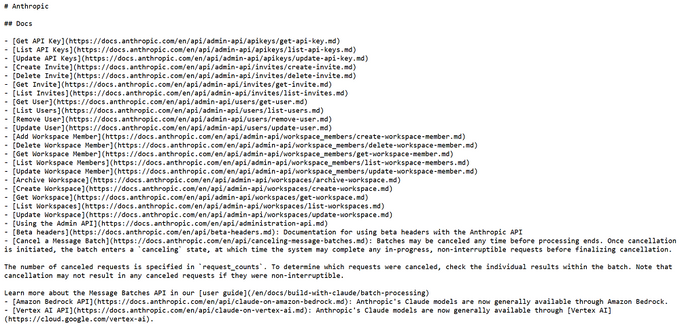

While llms.txt isn’t widely adopted yet, there are a few real-world examples that offer a glimpse into how it should work. For example, Anthropic has published its llms.txt file. Here's what it looks like:

You can browse more examples from over 600 other sites in the llms.txt directory.

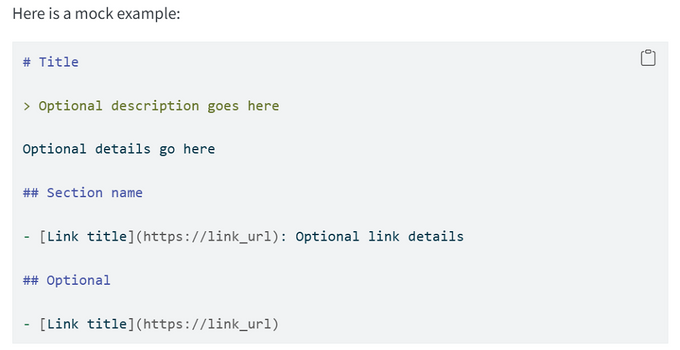

If you want to create your own llms.txt file, here’s how Howard recommends doing it:

- Use a Markdown file.

- Add an H1 heading with the name of your site or project (# Title). This is the only required step.

- Include a short blockquote (>) summary to provide context for the rest of the file.

- Optionally, add more detail about the project in any Markdown format except headings (paragraphs, lists, and so on).

- Use H2s (## Section name) to organize your content into logical sections.

- Under each H2, provide a Markdown list of important links (- [Link Title](https://yourwebsite.com/page): Details) with short descriptions.

- Add a section titled "## Optional" for links that can be skipped if space is limited.

- Host the file at your root domain (yourdomain.com/llms.txt).
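To make these steps concrete, here is a minimal Python sketch that assembles an llms.txt file in the layout described above. The function name `build_llms_txt`, the site "Example Co", and every URL are hypothetical placeholders; this is one way to generate the file, not a tool from Howard's proposal.

```python
# Sketch: assemble an llms.txt file following Howard's proposed layout.
# All names, text, and URLs below are hypothetical placeholders.


def build_llms_txt(title, summary="", details="", sections=None, optional=None):
    """Return llms.txt content as a Markdown string.

    sections: dict mapping H2 names to lists of (link_title, url, note) tuples.
    optional: list of (link_title, url, note) tuples for the "## Optional" section.
    """
    lines = [f"# {title}", ""]               # H1 title: the only required part
    if summary:
        lines += [f"> {summary}", ""]        # blockquote summary for context
    if details:
        lines += [details, ""]               # extra detail (any Markdown but headings)
    all_sections = dict(sections or {})
    if optional:
        all_sections["Optional"] = optional  # links a tool may skip if space is short
    for name, links in all_sections.items():
        lines += [f"## {name}", ""]
        for link_title, url, note in links:
            item = f"- [{link_title}]({url})"
            lines.append(f"{item}: {note}" if note else item)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(build_llms_txt(
        title="Example Co",
        summary="Example Co sells hypothetical widgets.",
        details="All prices are in USD.",
        sections={"Docs": [("Getting started", "https://example.com/start.md", "Setup guide")]},
        optional=[("Company history", "https://example.com/history.md", "")],
    ))
    # Save the printed output as llms.txt so it is served at example.com/llms.txt
```

Because the file is plain Markdown, you could just as easily write it by hand; a script like this only helps if you want to regenerate it from a CMS or content inventory.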

Howard's original proposal, published at llmstxt.org, includes a mock example of this format.
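On the consuming side, a tool that honored the format would need to read those sections back. The sketch below is only an illustration, not how any LLM provider is known to process llms.txt (none has confirmed using it). `parse_llms_txt` and `links_for_context` are hypothetical helpers, and the regex assumes each link sits on its own `- [Title](url): notes` line. It also shows what the "## Optional" section is for: links a tool can drop when context space is limited.

```python
import re

# Matches list items like "- [Title](https://url): optional notes"
LINK_RE = re.compile(r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)]+)\)(?::\s*(?P<note>.*))?$")


def parse_llms_txt(text):
    """Parse llms.txt Markdown into a title, a summary, and {section: [links]}."""
    title, summary, sections, current = None, None, {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and title is None:
            title = line[2:].strip()            # H1 title
        elif line.startswith("> ") and summary is None and current is None:
            summary = line[2:].strip()          # blockquote summary before any H2
        elif line.startswith("## "):
            current = line[3:].strip()          # start a new H2 section
            sections[current] = []
        elif current is not None:
            m = LINK_RE.match(line)
            if m:
                sections[current].append(
                    {"title": m["title"], "url": m["url"], "note": m["note"] or ""}
                )
    if title is None:
        raise ValueError("llms.txt needs an H1 title")
    return {"title": title, "summary": summary, "sections": sections}


def links_for_context(parsed, include_optional=False):
    """Flatten links, dropping the 'Optional' section unless asked to keep it."""
    return [
        link
        for name, links in parsed["sections"].items()
        if include_optional or name != "Optional"
        for link in links
    ]


if __name__ == "__main__":
    sample = """# Example Co
> Hypothetical widgets.
## Docs
- [Start](https://example.com/start.md): Setup guide
## Optional
- [History](https://example.com/history.md)
"""
    parsed = parse_llms_txt(sample)
    print([link["url"] for link in links_for_context(parsed)])
    # ['https://example.com/start.md']
```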

What's the difference between llms.txt and robots.txt?

llms.txt and robots.txt might seem similar at first glance, but they're actually quite different. In short, robots.txt is about exclusion, while llms.txt is about curation.

Let's explore how they differ in more detail:

- Purpose: Robots.txt tells crawlers which parts of a site they may or may not crawl. llms.txt, on the other hand, aims to help LLMs understand your content by pointing them to high-value, AI-friendly resources.

- Audience: Robots.txt applies to any crawler that chooses to honor it, from search engine bots like Googlebot and Bingbot to AI crawlers like OpenAI's GPTBot. llms.txt targets LLMs and AI agents like ChatGPT or Claude, which aren't focused on indexing but on comprehension and content generation.

- Format: Robots.txt is a plain text file of specific directives (like User-agent:, Disallow:, and Allow:). llms.txt is written in Markdown, which is easy for LLMs to parse and still easy for humans to read. It uses headings, lists, and hyperlinks to guide models toward important content.

- Function: Robots.txt controls access; it's about permission. llms.txt is about guidance: it suggests which content LLMs should focus on to understand your site more effectively.
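The exclusion-versus-curation split is easy to see in code. Python's standard-library `urllib.robotparser` answers the only question robots.txt asks (may this user agent fetch this URL?), while an llms.txt file simply lists pages worth reading. Both files and all URLs here are hypothetical; `GPTBot` is OpenAI's crawler user agent, used only as an example name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: exclusion rules ("may this bot fetch this URL?")
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

# Hypothetical llms.txt: a curated reading list ("what should a model read?")
LLMS_TXT = """\
# Example Co
> Hypothetical widgets.
## Docs
- [Pricing](https://example.com/pricing.md): Current plans
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

print(rp.can_fetch("GPTBot", "https://example.com/admin/users"))  # False: excluded
print(rp.can_fetch("GPTBot", "https://example.com/careers"))      # True: allowed, though not recommended

# llms.txt grants or denies nothing; it only points to pages worth reading.
curated = [line for line in LLMS_TXT.splitlines() if line.startswith("- [")]
print(curated)
```

Note that the careers page is crawlable but absent from the curated list: permission and recommendation are independent, which is why one file can't replace the other.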

Are websites using llms.txt?

Some are—but not many, and certainly not the big ones. A few smaller sites have added llms.txt files as an experiment, but there’s no widespread adoption.

Again, none of the major LLM providers has confirmed that its crawlers or models recognize or use llms.txt. Even Anthropic, which publishes its own llms.txt file, hasn’t confirmed that its models read or follow it, making the format more symbolic than functional for now.

The SEO community has been openly skeptical.

For example, here's what SEO consultant Mike Friedman had to say:

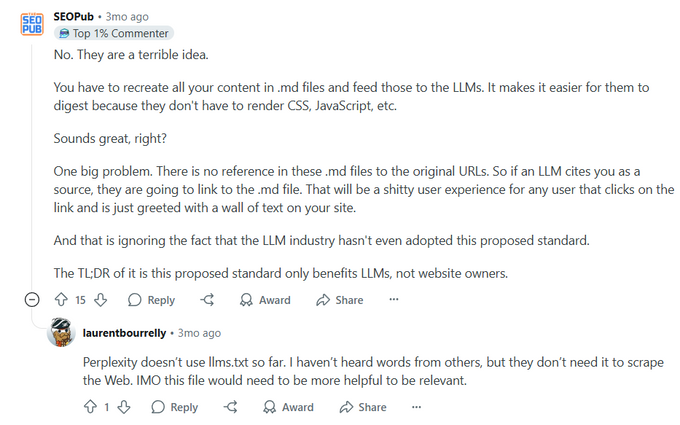

And here's the top comment from an r/SEO thread asking if llms.txt is helpful:

In a more lighthearted take, Chris Spann, senior technical SEO at Lumar (formerly Deepcrawl), remarked:

So, the general sentiment is clear: most SEOs aren't too fond of llms.txt and see it as unnecessary and unproven.

Here's what Google’s John Mueller had to say on Reddit:

That said, not everyone is dismissing it entirely. Amy-Leigh Idas, SEO and content strategy expert at Entail AI, notes:

While llms.txt has sparked discussion, it hasn’t sparked adoption. Until LLMs actually start using it—and the industry sees clear value—it’s likely to remain a niche experiment rather than a new standard.

Will llms.txt become standard?

No, llms.txt probably won't become standard anytime soon. While the idea behind it is solid, there just isn’t enough support yet from major players like Google or OpenAI. Some smaller sites are experimenting with it, and it fits nicely into the growing world of GEO (generative engine optimization), where the goal is to show up in AI answers. For websites that already use Markdown or have well-structured content, it’s a low-effort way to test the waters.

That said, it’s still early days. No major LLMs are actually using llms.txt, and keeping one updated could be a hassle for larger sites. For now, think of it as an optional extra, not a must-have. But even if llms.txt doesn’t take off, the bigger idea behind it—making your content easier for AI to read and use—is only going to get more important.
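One way to reduce that maintenance burden is a small check that flags llms.txt links that no longer appear in your sitemap. This is a sketch under stated assumptions: it treats sitemap.xml as the list of live pages and assumes llms.txt links point to those same URLs (if you link to separate .md versions, you'd need to map them first). `urls_from_sitemap` and `stale_links` are hypothetical names, and the sample files are made up.

```python
import re
import xml.etree.ElementTree as ET

# Standard sitemap namespace (sitemaps.org protocol)
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
# Captures the URL inside "[Title](URL)"
LINK_URL_RE = re.compile(r"\]\((https?://[^)\s]+)\)")

# Hypothetical sample files
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/start</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
</urlset>"""

LLMS = """# Example Co
## Docs
- [Start](https://example.com/start): Setup guide
- [Old promo](https://example.com/promo-2023): Retired page
"""


def urls_from_sitemap(sitemap_xml):
    """Return the set of <loc> URLs listed in a sitemap.xml string."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}


def stale_links(llms_txt, live_urls):
    """Return llms.txt link URLs that are missing from the live URL set."""
    return [url for url in LINK_URL_RE.findall(llms_txt) if url not in live_urls]


if __name__ == "__main__":
    print(stale_links(LLMS, urls_from_sitemap(SITEMAP)))
    # ['https://example.com/promo-2023']
```

Running a check like this on a schedule (or in a deploy pipeline) keeps the file from quietly pointing models at retired pages.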

» Chat with us about how to optimize your website for AI/LLMs.